DeepSeek AI is a major advancement in the field of open-source language models. It delivers powerful analytical capabilities without the need for specialized GPU hardware. Combined with Ollama, a lightweight model management tool, it offers an efficient way to run AI models locally while keeping control over data protection and performance.

Why host DeepSeek yourself?

If you run DeepSeek on your own jtti server, you benefit from increased privacy and security. This is especially important since security researchers recently discovered an unprotected DeepSeek database containing sensitive data such as chat logs, API keys, and backend details.

With self-hosting, all information remains within your own infrastructure. This helps you avoid such security risks and makes it easier to meet GDPR and HIPAA requirements.

Revolutionary CPU-based performance

DeepSeek models run efficiently on CPU-based systems, with no need for expensive GPU hardware. This makes advanced AI accessible to more users. Whether you use a 1.5b or a 14b model, DeepSeek runs reliably on common server configurations, including virtual private servers (VPS).

Local Control and Maximum Flexibility

With self-hosting, you have full access to the API and full control over model settings. This allows you to customize DeepSeek, optimize security measures, and integrate it seamlessly into your existing systems.

Because it is open source, DeepSeek provides full transparency and enables continuous community improvement. This makes it the perfect choice for anyone who values performance and data protection.

DeepSeek on jtti: Step-by-step Installation

System Requirements

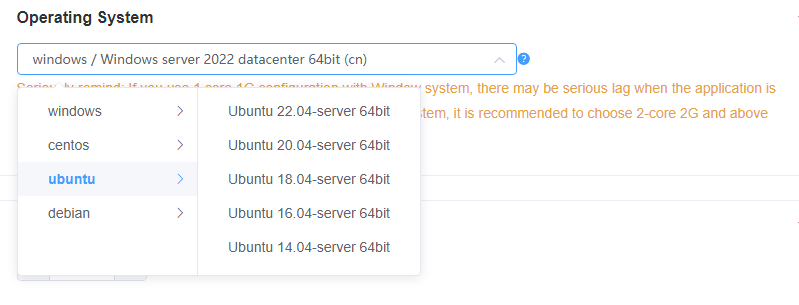

Before installing DeepSeek on your jtti server, make sure you are running Ubuntu 22.04 LTS on your system.

Note: We recommend using our VDS M-XXL models, as they provide the resources needed for optimal performance. Less powerful plans may work, but they will reduce the performance of the LLM.

Exclusive: jtti Pre-configured DeepSeek Images

jtti sets a new standard as the first and only VPS provider to offer pre-configured DeepSeek images. This eliminates the complex setup process, so you can get started in minutes.

Our pre-configured images ensure optimal performance settings and a ready-to-use environment.

To select a pre-configured DeepSeek image during server setup:

1. Go to step 4 “Image” in the Product Configurator

2. Select a DeepSeek image from the available options

3. Continue with server configuration

With a pre-configured image, you can use DeepSeek as soon as your server is deployed, without any additional installation. You still have enough freedom to test and further optimize the performance of your models.

Manual Installation

If you prefer a custom setup, you can install DeepSeek manually. The process is simple but requires a few steps. Here are the instructions:

In order for DeepSeek to run smoothly, your jtti server must be running Ubuntu 22.04 LTS.

First, make sure your system is up to date:

sudo apt update && sudo apt upgrade -y

Next, install Ollama. Ollama is a lightweight model management tool that handles the DeepSeek deployment process:

curl -fsSL https://ollama.com/install.sh | sh

Once Ollama is installed, start the Ollama service so that you can deploy your model:

sudo systemctl start ollama

Now you can download the DeepSeek model you need. The 14b version provides an excellent balance between performance and resource usage:

ollama pull deepseek-r1:14b

Once the download is complete, your server is ready to use DeepSeek AI.
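If you script the installation, you may want to skip the download when the model is already present. The following is a minimal sketch, not part of Ollama itself: `has_model` and `pull_if_missing` are hypothetical helper names, and the sketch assumes that `ollama list` prints the model tag in the first column of each row.

```shell
# Succeeds if the model tag given as $1 appears in the first column of
# `ollama list`-style output read from stdin (assumed column layout).
has_model() {
  awk -v m="$1" '$1 == m { found = 1 } END { exit !found }'
}

# Hypothetical wrapper: pull the model only if it is not installed yet.
pull_if_missing() {
  if ollama list | has_model "$1"; then
    echo "$1 is already installed"
  else
    ollama pull "$1"
  fi
}
```

You would call it as `pull_if_missing deepseek-r1:14b`.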

Model Deployment

After installation, you can use DeepSeek right away. To chat with the model interactively, run it directly:

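ollama run deepseek-r1:14b

If you want to integrate DeepSeek into your application, start the API service. If the Ollama systemd service from the installation step is already running, the API is already being served and this command is not needed: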

ollama serve

Check Installation

Make sure everything has been installed correctly by performing the following checks:

ollama list

ollama run deepseek-r1:14b "Hello, please check whether you are working correctly."

If these checks succeed, your DeepSeek setup is ready!
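Beyond the command-line checks, you can also probe the HTTP API that your application would use. The sketch below assumes Ollama is listening on its default local port 11434 and uses the `/api/generate` endpoint. `build_payload` and `ask_deepseek` are hypothetical helper names, and the payload builder does no JSON escaping, so it only suits simple prompts.

```shell
# Build the JSON body for Ollama's /api/generate endpoint.
# Assumption: model name and prompt contain no double quotes or
# backslashes, because this sketch does not escape JSON.
build_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' "$1" "$2"
}

# Send a prompt to the local Ollama API and print the raw JSON reply.
# Assumption: the API runs on the default address localhost:11434.
ask_deepseek() {
  curl -s http://localhost:11434/api/generate \
    -d "$(build_payload "deepseek-r1:14b" "$1")"
}
```

Calling `ask_deepseek "Reply with OK if you are working."` should return a JSON object whose `response` field contains the model's answer.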

Model Compatibility and System Requirements

Model Size Overview

DeepSeek offers models in different sizes, tailored to different requirements:

| Model version | Size | Required RAM | Recommended plan | Use cases |
| --- | --- | --- | --- | --- |
| deepseek-r1:1.5b | 1.1 GB | 4 GB | VDS M | Basic tasks, runs on standard laptops, ideal for testing and prototyping |
| deepseek-r1:7b | 4.7 GB | 10 GB | VDS M | Text processing, coding, translation |
| deepseek-r1:14b | 9 GB | 20 GB | VDS L | Strong performance for coding, translation, and text generation |
| deepseek-r1:32b | 19 GB | 40 GB | VDS XL | Matches or exceeds OpenAI's o1-mini, ideal for advanced analysis |
| deepseek-r1:70b | 42 GB | 85 GB | VDS XXL | For enterprise applications, complex computations, and advanced AI analysis |

Depending on your requirements, you can choose the right model for your AI hosting on Contabo.
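The RAM column of the table can be turned into a small selection helper. This is only a sketch: `pick_model` is a hypothetical function name, the thresholds are copied from the table, and the input is assumed to be the server's RAM in whole gigabytes.

```shell
# Print the largest deepseek-r1 tag whose required RAM (per the table)
# fits into the given amount of RAM, passed as whole gigabytes in $1.
pick_model() {
  ram_gb=$1
  if   [ "$ram_gb" -ge 85 ]; then echo "deepseek-r1:70b"
  elif [ "$ram_gb" -ge 40 ]; then echo "deepseek-r1:32b"
  elif [ "$ram_gb" -ge 20 ]; then echo "deepseek-r1:14b"
  elif [ "$ram_gb" -ge 10 ]; then echo "deepseek-r1:7b"
  elif [ "$ram_gb" -ge 4 ];  then echo "deepseek-r1:1.5b"
  else
    # Below the smallest requirement in the table.
    echo "not enough RAM for any listed model" >&2
    return 1
  fi
}
```

For example, `pick_model 20` prints `deepseek-r1:14b`, which you could pass straight to `ollama pull`.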

Resource Allocation Tips

Efficient memory management is essential for optimal performance. As a rule of thumb, provide at least twice the model's size in RAM to ensure smooth operation. For example, the 14b model (9 GB) runs best with 20 GB of RAM, which leaves room for model loading and processing.
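The rule of thumb is simple arithmetic, and a short sketch makes it easy to check a plan before ordering. `min_ram_gb` and `fits_in_ram` are hypothetical helper names. Both take sizes in gigabytes and use `awk` because plain shell arithmetic cannot handle decimals such as 4.7.

```shell
# Print the minimum RAM in GB under the "twice the model size" rule.
# $1: model size in GB (decimals allowed).
min_ram_gb() {
  awk -v size="$1" 'BEGIN { printf "%.1f\n", size * 2 }'
}

# Succeed if the available RAM covers twice the model size.
# $1: model size in GB, $2: available RAM in GB.
fits_in_ram() {
  awk -v size="$1" -v ram="$2" 'BEGIN { exit !(ram >= 2 * size) }'
}
```

For the 14b model, `min_ram_gb 9` prints `18.0`, so `fits_in_ram 9 20` succeeds while `fits_in_ram 9 16` fails.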

Regarding storage, NVMe drives are the best choice for faster model loading times.

Why choose jtti for DeepSeek hosting

jtti's servers offer high-performance NVMe storage, high monthly traffic allowance (32 TB), and competitive pricing with no hidden fees. With data centers in 12 locations across 9 regions on four continents, jtti provides global coverage for your AI deployments. The network includes multiple locations in Europe, the United States (New York, Seattle, St. Louis), Asia (Singapore, Tokyo), Australia (Sydney), and India. The combination of reliable hardware and cost-effective plans makes it an ideal choice for self-hosted AI applications.

Final words on DeepSeek hosting

The combination of DeepSeek's powerful and efficient model and solid server infrastructure opens up entirely new possibilities for companies that value data protection and performance.
